A journey of a thousand miles starts with a single step.

Building a complex system that is scalable, fault tolerant, and full of other cool stuff also starts with something very simple.

Single Server Set Up :

We will start with a single server, which means there are only two components: the Server and the Client. If you are wondering where the database lives, it also resides within the server.

When a client sends a request, the server fetches the relevant data from its internal DB, does the computation locally, and sends back a response. But as you can see, this setup has a big problem: a Single Point Of Failure (SPOF). Simply put, if your server goes down, your entire website goes down with it. Worse, if the server's DB gets corrupted, you lose all the data, and in many cases it is unrecoverable.
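To make the single-server idea concrete, here is a minimal sketch in Python. It assumes an in-memory SQLite database and a hypothetical `users` table and `handle_request` function, none of which come from a real system; the only point is that the data and the request handling live in the same process on the same machine.

```python
import sqlite3

# The database lives inside the server process (in memory here, for brevity).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
db.execute("INSERT INTO users (id, name) VALUES (1, 'Asha')")
db.commit()


def handle_request(user_id: int) -> dict:
    """Fetch from the local DB, compute, and build the response on one machine."""
    row = db.execute(
        "SELECT name FROM users WHERE id = ?", (user_id,)
    ).fetchone()
    if row is None:
        return {"status": 404, "body": "user not found"}
    return {"status": 200, "body": f"Hello, {row[0]}!"}
```

If this one process (or its machine) dies, both the request handling and the data go with it, which is exactly the SPOF described above.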

Individual machine for Server & Database :

To reduce this risk, we can put the Server and the Database on different machines (VMs). All traffic still goes through the Server, and whenever it needs data from the DB it fetches it over a network call. A server crash then no longer takes the data down with it, and we can scale the Server and the Database independently. So if your website is getting lots of traffic, you can add more VMs for the server without touching the database, and vice versa.

There are two types of scaling :

Vertical Scaling : If we add more resources (CPU, RAM, GPU) to a single machine, it's called vertical scaling. As the load increases, you keep adding more and more resources to that one machine. But there is a hardware limit to keep in mind here, since one machine can only hold a limited amount of resources.

Horizontal Scaling : Instead of adding more resources to one machine, we add more machines, each with the required amount of resources, to act as servers. As the load increases, we simply add more machines.

The ideal solution is usually a hybrid of vertical and horizontal scaling, where we add machines with medium resources as the traffic increases. We will talk about the pros and cons of vertical and horizontal scaling in another article.
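The difference between the two approaches can be shown with a bit of arithmetic. The sketch below is only an illustration: the function names and all the request-per-second figures are made-up assumptions, not measurements.

```python
import math


def fits_on_one_machine(total_rps: int, biggest_machine_rps: int) -> bool:
    """Vertical scaling works only while the largest available machine can take the load."""
    return total_rps <= biggest_machine_rps


def machines_needed(total_rps: int, rps_per_machine: int) -> int:
    """Horizontal scaling: how many medium machines are needed to cover the load."""
    return math.ceil(total_rps / rps_per_machine)


# Hypothetical numbers: 10,000 requests/s of traffic, a medium machine handles
# 1,500 requests/s, and the biggest machine we can buy handles 8,000 requests/s.
vertical_ok = fits_on_one_machine(10_000, 8_000)   # False: we hit the hardware limit
medium_machines = machines_needed(10_000, 1_500)   # 7 medium machines
```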

Load Balancer :

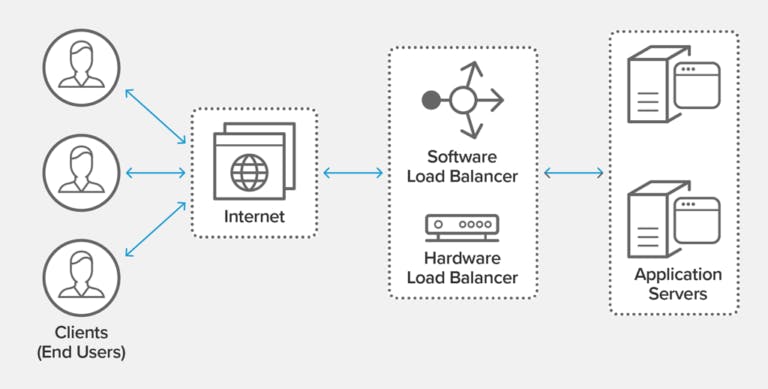

The need for a load balancer grows as we scale out our servers, because when traffic increases we need to make sure that all of it doesn't go to just one server. If it does, that server gets overloaded, its response time increases, and eventually it crashes.

A load balancer is itself a machine whose job is to evenly distribute incoming traffic among all the web servers defined in the load balancer set.

So, let's see how a load balancer works behind the scenes.
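One simple way to distribute traffic evenly is round robin, where requests are handed to the servers in turn. The sketch below assumes that strategy (real load balancers offer several others), and the class name and server names are hypothetical.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers from the load balancer set one after another, in a loop."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = cycle(servers)

    def pick(self):
        # Each call returns the next server in the rotation.
        return next(self._servers)


balancer = RoundRobinBalancer(["web-1", "web-2", "web-3"])
# Nine incoming requests end up spread evenly: three per server.
hits = Counter(balancer.pick() for _ in range(9))
```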

Normal Flow :

When a client visits a website by entering a domain name (e.g. abhisekh.in), the first thing that happens is that the client's machine sends a DNS request to a DNS server asking for the IP of "abhisekh.in". Once DNS returns the IP (the server's IP), the browser sends an HTTP request to that IP, and the machine behind it (the server itself) replies with a response. This is how a normal website works. But if we use a load balancer, there is one more layer between DNS and the actual web server.
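The two steps of the normal flow can be sketched with Python's standard library. This is a rough illustration rather than what a browser actually does internally: the function names `resolve` and `fetch` are made up here, and only IPv4 and plain HTTP are assumed.

```python
import http.client
import socket
from typing import Tuple


def resolve(hostname: str) -> str:
    """Step 1: ask the system's resolver for an IPv4 address of the host."""
    infos = socket.getaddrinfo(
        hostname, 80, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr is (ip, port).
    return infos[0][4][0]


def fetch(hostname: str, port: int = 80, path: str = "/") -> Tuple[int, bytes]:
    """Step 2: send an HTTP GET to the resolved IP and return (status, body)."""
    ip = resolve(hostname)
    conn = http.client.HTTPConnection(ip, port, timeout=5)
    try:
        # The Host header tells the server which site the request is for.
        conn.request("GET", path, headers={"Host": hostname})
        response = conn.getresponse()
        return response.status, response.read()
    finally:
        conn.close()
```

Calling `fetch("abhisekh.in")` would perform both steps against the real site, so it needs network access.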

Load balancer Flow :

The client enters a domain name, the DNS request goes to the DNS server, and the DNS server responds with the IP of the load balancer. Your browser then sends its request to the load balancer, which looks at the request and forwards it to one of the web servers. That web server processes the request and sends its response back to the load balancer, which in turn forwards the response to you.

To keep security tight, we should place the web servers in the load balancer's private (local) network, so that only the load balancer can reach them over private IPs and they are not exposed to the outside world. Private IPs are IP addresses that are reachable only between machines within the same network.

To keep the article short, we will discuss the other fundamentals in upcoming blogs (e.g. how to handle database failures, caching, CDNs, etc.).
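The hops in this flow can be traced with a small in-process simulation. Everything here is a stand-in: the `WebServer`, `LoadBalancer`, and `browse` names are hypothetical, the `dns` dictionary plays the role of the DNS server, and no real networking takes place.

```python
from typing import Dict, List


class WebServer:
    def __init__(self, name: str) -> None:
        self.name = name

    def handle(self, path: str, trace: List[str]) -> str:
        trace.append(f"{self.name} handles {path}")
        return f"200 OK from {self.name}"


class LoadBalancer:
    def __init__(self, servers: List[WebServer]) -> None:
        self.servers = servers
        self._next = 0  # index of the server that gets the next request

    def handle(self, path: str, trace: List[str]) -> str:
        server = self.servers[self._next % len(self.servers)]
        self._next += 1
        trace.append(f"load balancer forwards {path} to {server.name}")
        response = server.handle(path, trace)
        trace.append("load balancer relays response to client")
        return response


# DNS maps the domain to the load balancer, not to any individual web server.
dns: Dict[str, LoadBalancer] = {
    "abhisekh.in": LoadBalancer([WebServer("web-1"), WebServer("web-2")])
}


def browse(domain: str, path: str) -> List[str]:
    """Return the list of hops a single request goes through."""
    trace = [f"DNS resolves {domain} to the load balancer"]
    dns[domain].handle(path, trace)
    return trace
```

Calling `browse("abhisekh.in", "/")` twice in a row shows the first request landing on `web-1` and the second on `web-2`, while the client only ever talks to the load balancer.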
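As a quick way to tell private addresses from public ones, the sketch below checks an IPv4 address against the three private ranges reserved by RFC 1918. The helper name `is_private_ipv4` and the sample addresses are only illustrative.

```python
import ipaddress

# The three IPv4 ranges reserved for private networks (RFC 1918).
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_private_ipv4(address: str) -> bool:
    """True if the address falls inside one of the RFC 1918 private ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in RFC1918_NETWORKS)


web_server_is_private = is_private_ipv4("10.0.0.5")  # True: internal only
public_dns_is_private = is_private_ipv4("8.8.8.8")   # False: publicly routable
```

A web server that only has an address like `10.0.0.5` cannot be reached directly from the internet; requests have to come in through the load balancer's public IP.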

Additional Study Material : Load Balancer in Depth